For years, Intel built its performance reputation primarily on processors. Only with the Xe architecture, the Ponte Vecchio family of accelerators and the Battlemage generation of graphics cards did the company show that it wanted to fully enter the realm of parallel computing driven by GPUs. To do this without replicating the closed CUDA model, Intel created the oneAPI platform, an ecosystem designed to bring processors, graphics cards and dedicated accelerators under a single unified programming model. Intel has bet on open standards so that developers are not tied to a single brand or architecture.

Intel oneAPI was created with a simple goal in mind: a developer should write a program only once, and that program should then run on different types of hardware, whether that is a processor, a graphics card, or a compute accelerator. The model is based on SYCL, which builds on standard C++ and adds a parallel computing model, so the same code can be compiled for different computing chips, as long as a SYCL implementation supports them. In doing so, Intel has created a way to program GPUs that is comparable to NVIDIA's CUDA or AMD's ROCm, with the advantage that SYCL itself is an open standard managed by the Khronos Group.

oneAPI as the foundation of Intel’s computing software

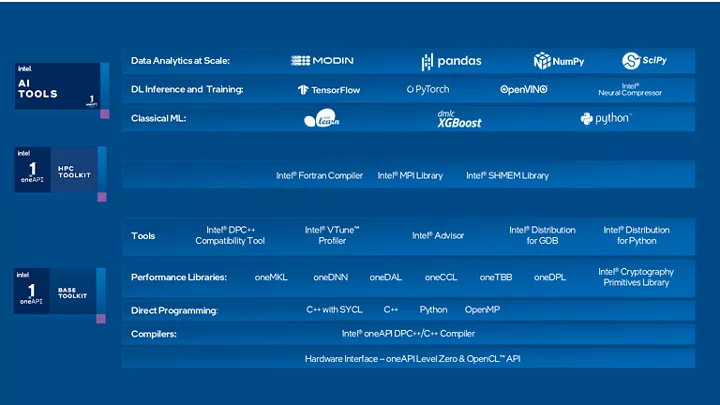

Intel oneAPI forms the core software layer that drives computation on Intel hardware. The platform provides compilers, optimized libraries for scientific computing, artificial intelligence tools, and the runtime system. It lets you create applications that adapt to the available hardware: the same source can target a CPU, an integrated GPU, a discrete Arc card, or an accelerator in the data center. The runtime discovers the devices present in the system, and the application either selects one explicitly or leaves the choice to a default selector.

SYCL plays the role of the parallel programming model. It lets the developer express work-items and work-groups (roughly what CUDA calls threads and thread blocks) together with the data structures that a GPU needs for massive parallelism. In this way, SYCL plays a similar role to the CUDA API in the NVIDIA ecosystem, but with the advantage of not being a closed system. A developer can write the same program for different brands of GPUs as long as a SYCL implementation exists for each of them, and that implementation takes care of the hardware-specific details.
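To make the programming model more tangible, here is a minimal sketch of an element-wise vector addition. It is only an illustration, not code from Intel: the helper name `vector_add` and the macro `SKETCH_HAS_SYCL` are made up for this example. When the SYCL 2020 header is available, the loop body runs as a kernel on whatever device the default selector picks; otherwise the function falls back to a plain serial loop so that the sketch also builds with an ordinary C++17 compiler.

```cpp
#include <cstddef>
#include <vector>

// Use SYCL only if the SYCL 2020 header can be found (assumption: a SYCL
// compiler such as DPC++ is then being used to build this file).
#if __has_include(<sycl/sycl.hpp>)
#include <sycl/sycl.hpp>
#define SKETCH_HAS_SYCL 1
#else
#define SKETCH_HAS_SYCL 0
#endif

// Hypothetical helper: element-wise sum of two vectors of equal length.
std::vector<float> vector_add(const std::vector<float>& a,
                              const std::vector<float>& b) {
    const std::size_t n = a.size();  // assumes b.size() == a.size()
    std::vector<float> out(n, 0.0f);
    if (n == 0) return out;

#if SKETCH_HAS_SYCL
    // The queue binds to a device chosen by the implementation (CPU or GPU).
    sycl::queue q{sycl::default_selector_v};
    {
        // Buffers wrap host memory; accessors declare how a kernel uses it.
        sycl::buffer<float, 1> buf_a{a.data(), sycl::range<1>{n}};
        sycl::buffer<float, 1> buf_b{b.data(), sycl::range<1>{n}};
        sycl::buffer<float, 1> buf_out{out.data(), sycl::range<1>{n}};

        q.submit([&](sycl::handler& h) {
            sycl::accessor in_a{buf_a, h, sycl::read_only};
            sycl::accessor in_b{buf_b, h, sycl::read_only};
            sycl::accessor res{buf_out, h, sycl::write_only, sycl::no_init};
            // One work-item per element; i is that work-item's global index.
            h.parallel_for(sycl::range<1>{n}, [=](sycl::id<1> i) {
                res[i] = in_a[i] + in_b[i];
            });
        });
    }  // buf_out is destroyed here, which copies the result back into out
#else
    // Serial fallback with the same result, for builds without SYCL.
    for (std::size_t i = 0; i < n; ++i) out[i] = a[i] + b[i];
#endif
    return out;
}
```

Calling `vector_add({1, 2, 3}, {10, 20, 30})` yields a vector holding 11, 22 and 33 on either path. The point of the sketch is that nothing in the kernel names a specific vendor or device.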

Xe cores as the hardware basis for graphics computing

The hardware side is provided by the Xe architecture. Its compute building blocks were called Execution Units in earlier generations; newer generations group vector engines into larger units called Xe Cores. They play a role comparable to NVIDIA's streaming multiprocessors with their CUDA cores, or AMD's compute units with their stream processors. High performance only arises when many of these units, with thousands of vector lanes in total, work on large amounts of data at the same time.
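How a large data set is spread over many compute units can be modelled in a few lines. The following is a serial sketch in plain C++, not SYCL or driver code: it splits `n` elements into work-groups of a fixed size and computes the global index each work-item would handle. The names `WorkItem`, `num_groups` and `enumerate_work_items` are hypothetical, and which Xe Core actually runs which group is decided by the runtime and the hardware, not by code like this.

```cpp
#include <cstddef>
#include <vector>

// One logical work-item: its group, its position inside the group,
// and the element of the data set it is responsible for.
struct WorkItem {
    std::size_t group;
    std::size_t local;
    std::size_t global;
};

// Number of work-groups needed to cover n elements (rounds up).
// Assumes group_size > 0.
std::size_t num_groups(std::size_t n, std::size_t group_size) {
    return (n + group_size - 1) / group_size;
}

// Enumerate, serially, the work-items a data-parallel launch would create.
// On a GPU these would run concurrently instead of in a loop.
std::vector<WorkItem> enumerate_work_items(std::size_t n,
                                           std::size_t group_size) {
    std::vector<WorkItem> items;
    items.reserve(n);
    for (std::size_t g = 0; g < num_groups(n, group_size); ++g) {
        for (std::size_t l = 0; l < group_size; ++l) {
            const std::size_t global = g * group_size + l;
            // The last group may be only partly filled; skip the overhang.
            if (global < n) items.push_back(WorkItem{g, l, global});
        }
    }
    return items;
}
```

For example, 1000 elements with a group size of 256 need four groups, and the last group is only partly used. This is why kernels usually guard against indices beyond the end of the data.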

Intel also complements this architecture with dedicated compute units. XMX (Xe Matrix Extensions) engines accelerate the matrix operations that form the basis of most modern AI models. This combination of general-purpose compute units and dedicated accelerators lets both Arc graphics cards and server chips compete with solutions built on CUDA or ROCm, although there is still some catching up to do in terms of market share.
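The kind of operation that matrix units accelerate is a multiply-accumulate on small tiles, D = A * B + C, typically with low-precision inputs and a wider accumulator. The sketch below shows that arithmetic in plain C++ with 8-bit inputs and 32-bit accumulation. It is an illustration of the math only: the 4x4 tile size and the names `tile_mma`, `TileI8` and `TileI32` are assumptions for this example and do not reflect the actual tile shapes or the programming interface of XMX.

```cpp
#include <array>
#include <cstddef>
#include <cstdint>

// Hypothetical tile size, chosen only to keep the example small.
constexpr std::size_t kTile = 4;

using TileI8 = std::array<std::array<std::int8_t, kTile>, kTile>;
using TileI32 = std::array<std::array<std::int32_t, kTile>, kTile>;

// Computes D = A * B + C on one tile.
// Inputs are 8-bit integers; products are accumulated in 32 bits so that
// the sums do not overflow the narrow input type.
TileI32 tile_mma(const TileI8& a, const TileI8& b, const TileI32& c) {
    TileI32 d = c;  // start from the accumulator tile
    for (std::size_t i = 0; i < kTile; ++i) {
        for (std::size_t j = 0; j < kTile; ++j) {
            for (std::size_t k = 0; k < kTile; ++k) {
                d[i][j] += static_cast<std::int32_t>(a[i][k]) *
                           static_cast<std::int32_t>(b[k][j]);
            }
        }
    }
    return d;
}
```

A dedicated matrix unit performs this whole tile operation in hardware instead of as three nested loops, which is where the speed-up for AI workloads comes from.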

Linking software and hardware through an open standard

Intel's oneAPI software lets tasks be placed on whichever available device suits them, with the aim of using all parallel computing units well. The GPU handles massive data-parallel batches, while the CPU executes tasks that have a low degree of parallelism or are inherently sequential. Compute accelerators for AI and HPC take on the most demanding parts of models that require high throughput at low precision. Ideally, each part of the system gets the work it handles most efficiently. The runtime and libraries take over much of the low-level detail, although the choice of device for a given piece of work still rests with the application or the library it calls.
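The division of labour described above can be written down as a simple decision rule. The following sketch is a hypothetical heuristic in plain C++, not something oneAPI does on its own: the types `Task` and `Target`, the function `choose_target` and the threshold `kGpuThreshold` are all invented for illustration, and a real application would tune such a rule by measurement.

```cpp
#include <cstddef>

// Where a piece of work could run in this simplified model.
enum class Target { Cpu, Gpu, MatrixAccelerator };

// A coarse description of a task (hypothetical fields).
struct Task {
    std::size_t independent_items;  // elements that can be processed in parallel
    bool low_precision_matrix;      // dominated by low-precision matrix math
};

// Assumed cut-off below which launching on a GPU is not worth the overhead.
constexpr std::size_t kGpuThreshold = 10000;

// Picks a target following the rule of thumb from the text:
// low-precision matrix work goes to a matrix accelerator if one exists,
// large data-parallel batches go to the GPU, everything else to the CPU.
Target choose_target(const Task& t, bool has_gpu, bool has_matrix_accel) {
    if (t.low_precision_matrix && has_matrix_accel) {
        return Target::MatrixAccelerator;
    }
    if (t.independent_items >= kGpuThreshold && has_gpu) {
        return Target::Gpu;
    }
    return Target::Cpu;  // sequential or small tasks, or no other device
}
```

With this rule, a task with a million independent items goes to the GPU when one is present, while a task with only a handful of items stays on the CPU. The CPU also serves as the fallback when no other device is available.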

The future of Intel oneAPI and community reaction

Intel is developing oneAPI at a rapid pace. New generations of GPUs bring both a higher number of Xe cores and more powerful XMX units for AI computing. Server accelerators such as Ponte Vecchio and its successors show that Intel is targeting not only the consumer segment but especially the data center and HPC market. Intel's computing ecosystem is thus gradually growing and becoming a viable option for projects looking for open alternatives to CUDA or ROCm.

The developer community's perception of Intel oneAPI is largely positive. In particular, developers appreciate that it is an open ecosystem based on the SYCL standard, which allows one code base to be written for different types of hardware. Many welcome that the platform is not closed like CUDA and offers more flexibility. On the other hand, it is often mentioned that outside of the HPC environment, adoption of oneAPI is not yet as strong as Intel intended. Another point of discussion is that not all non-Intel GPUs have a full SYCL implementation, which may limit code portability in practice.

Conclusion

Intel oneAPI, SYCL, and Xe cores form the foundation of Intel's computing strategy. The software ecosystem provides a unified programming model for CPUs, GPUs and accelerators. SYCL's parallel computing model allows the power of graphics cards to be harnessed efficiently and without the constraints of a single brand. On the hardware side, Xe cores provide massive parallelism and XMX units add the AI acceleration that is essential today. The result is a flexible and open ecosystem that grows with each generation of hardware and lets developers use computing power across CPUs, GPUs and accelerators rather than on a single vendor's GPUs.
