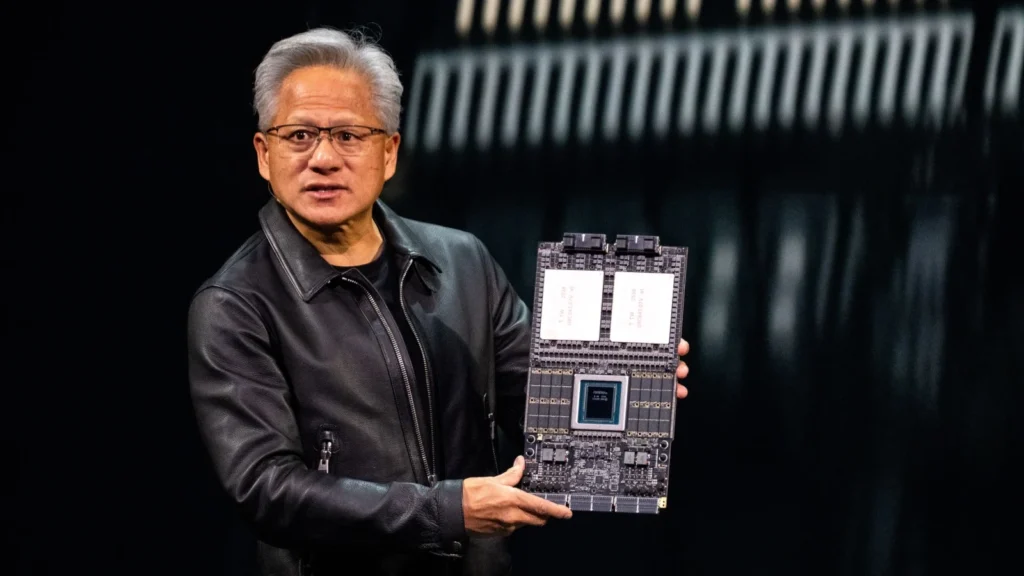

In late October, at GTC 2025 in Washington, D.C., NVIDIA unveiled its most ambitious project to date: the Vera Rubin Superchip. The new platform combines a Vera CPU and two Rubin GPUs in a single, extremely compact module that could change the way data centers approach AI and compute.

The event, held October 27-29, 2025 in downtown Washington, D.C., featured the first public showing of a working prototype of the next-generation superchip. On stage, CEO Jensen Huang described Vera Rubin as “a platform where the CPU and GPU work together as one consciousness.” In other words, it is a technology meant to push the boundaries of AI and change computing as we know it today.

A compact module with a pair of GPUs and an 88-core CPU

During GTC 2025, NVIDIA physically demonstrated the Vera Rubin Superchip for the first time – an extremely compact module that combines all key components into a single computing unit.

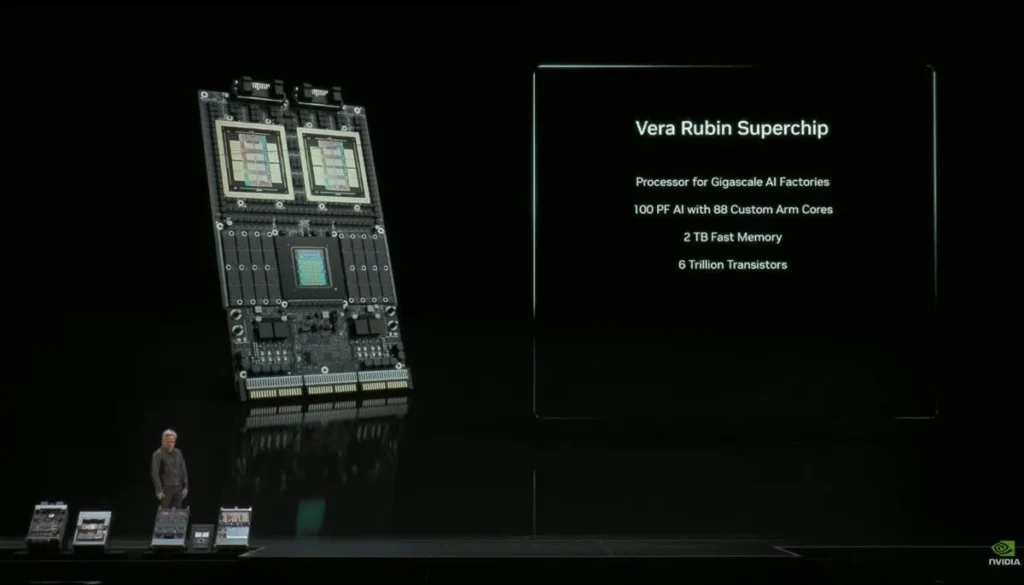

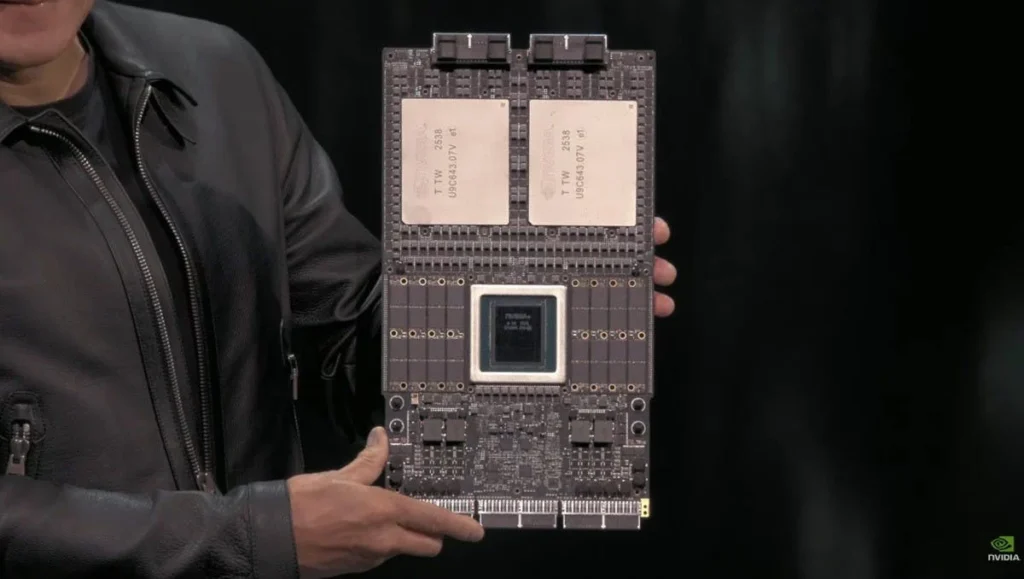

The board, approximately 30×20 centimeters, houses two Rubin GPUs, an 88-core Vera CPU, and eight SOCAMM memory modules that replace traditional system RAM. Each SOCAMM module acts as a separate memory block placed close to the processors, which shortens memory access paths and speeds up data processing.

The board does without the classic PCI Express interface entirely. Instead, NVIDIA uses a new design built around NVLink Gen6 and CXL interfaces located on the top and bottom of the module. These connectors provide direct communication between multiple Superchip modules in a server rack. The result is very low latency between the CPU and GPU parts, a major difference from traditional GPU accelerators, which communicate with the host CPU over PCIe.

In terms of layout, this is a multi-layer board with the Rubin GPUs placed on the sides and the Vera CPU in the middle, which spreads heat more evenly and improves cooling efficiency. Power is delivered through a trio of connectors on the bottom edge that feed the entire Superchip together, rather than supplying the GPU and CPU separately as on older DGX platforms.

Visually, the Vera Rubin Superchip module looks like a miniature computer motherboard, except that everything on it is tailored for AI computing at data center scale. With it, NVIDIA has signaled the direction its future infrastructure will take: not standalone accelerators, but integrated compute modules in which the CPU and GPU work as a single unit.

Rubin GPU – the successor to Blackwell for AI supercomputing

The Rubin GPUs form the main computational foundation of the Vera Rubin Superchip module. Each GPU uses a chiplet architecture in which the compute chiplets are linked by a high-speed internal interconnect. This design allows more efficient power distribution and better heat management under extreme load.

Each chip contains multiple compute chiplets surrounded by HBM4 memory stacks, which provide roughly twice the bandwidth of HBM3e. Placing memory directly next to the compute units minimizes latency and power consumption. This lets Rubin GPUs process huge blocks of data without relying on external memory, which suits large language model training, simulation, and generative AI inference.

The chips are manufactured on a 3nm process and optimized for massive parallelism, that is, processing many tasks simultaneously. Each GPU communicates with the Vera CPU over an NVLink Gen6 interface rather than PCIe. This direct CPU-GPU connection provides higher data throughput and lower latency for AI workloads.

Rubin GPUs will form the basis of NVIDIA's next DGX and rack-scale data center systems, which will replace the current Blackwell-based lineups such as GB200. Production trials have been underway since October 2025, with the first production units expected in the second half of 2026.

Vera CPU – 88 cores optimized for AI and HPC

The Vera CPU manages the operation of the entire Vera Rubin Superchip and also takes an active part in computation. It features 88 cores with a high degree of parallelism, plus integrated tensor and vector units that accelerate data processing directly on the CPU. This reduces the need to constantly shift tasks between the CPU and GPU, significantly cutting latency.

The CPU connects to the pair of Rubin GPUs via NVLink Gen6, which enables bidirectional data transfer with minimal latency. It also communicates with the other Superchip modules in the rack, creating a unified computing network in which compute capacity is dynamically redistributed according to the current workload.

The Vera CPU delivers noticeably higher efficiency and better performance per watt than previous Grace Hopper systems. Combined with the Rubin GPUs, it forms a tightly integrated unit that processes data without the traditional boundaries between CPU and GPU.

Conclusion – Vera Rubin Superchip

The Vera Rubin Superchip is not just another product but a new foundation on which NVIDIA is building the future of computing. It is designed for AI supercomputers, cloud platforms, and data centers where, for the first time, CPUs and GPUs operate as a single organism: faster, more efficient, and smarter than ever before.

Production will begin in the second half of 2026, with data center deployment expected in 2027. With this move, NVIDIA is ushering in an era in which the lines between compute and intelligence blur. As Jensen Huang put it: “We’re building systems that don’t just compute. They will understand.”