NVIDIA Rubin isn’t just another graphics chip, it’s an entire complex architecture that takes AI computing to another level. After Blackwell, Rubin comes as the foundation for data centers, bringing with it multiple specialized chips that work together as one system.

The NVIDIA Rubin family includes three main parts: the Rubin GPU with HBM memory designed to generate outputs, Rubin CPX, an accelerator for processing huge context, and Vera Rubin CPU, a new processor that manages tasks and complements both the GPU and CPX.

The Rubin CPX is the most attention-grabbing. This chip can process up to a million tokens of context in a single step. A token is the basic unit of input that the AI processes. It can be a piece of text (part of a word, a character), a command in code, or in multimodal models, even a section of audio or a small piece of an image. Thus, a million tokens means that the model can process content at the level of hundreds of thousands of words, entire projects or long multimedia files at once without splitting them into parts.

In practice, this means that the AI can read an entire book, a large programming project or an hour-long video at once without dividing the content into parts. This is a fundamental shift from traditional GPUs, which had to process data sequentially.

How the NVIDIA Rubin architecture works

Data processing in AI is done in two steps. First, the model reads the entire input (context phase – prefill), then it produces the result (generation phase). Classic GPUs had to handle both phases at once, causing unnecessary overload and lower efficiency.

The Rubin architecture introduces a new approach called disaggregated inference. Tasks are distributed among specialized chips:

- Rubin CPX is optimized for context retrieval and processing. Thanks to its large memory, it can prepare the entire input at once and store it in a key-value cache, which is then used by other chips.

- The Rubin GPU with HBM memory focuses primarily on the output generation phase – that is, the actual generation of text, images or video. The GPU can technically handle prefills as well, but in Ruby this task is shifted to the CPX, which handles it more efficiently.

- Vera Rubin’s CPU completes the system as a control unit. It provides organization of smaller logic tasks, coordinates data flow, and interacts with the software orchestrator.

These chips are not combined into a single processor. They reside separately in the data centers and communicate with each other via high-speed infrastructure such as NVLink and ConnectX-9 network adapters. The coordination of the entire process is managed by software (such as NVIDIA Dynamo), which ensures that the units work as a single unit.

The result is a system where each chip does what it does best. The CPX handles the huge context, the GPUs generate the outputs without bottlenecks, and CPU Vera manages how they work together. This approach delivers higher performance, better efficiency, and enables AI to work with data at a scale that was previously unattainable. ddelene in data centers, connected by high-speed infrastructure and software.

NVIDIA Rubin technology and the difference from Blackwell

NVIDIA Rubin not only delivers higher performance, but an entirely new approach to chip design. Each component is built on a different memory technology and has its own role in the system.

Rubin CPX

Uses high-capacity GDDR7 memory. This combination allows it to efficiently process huge inputs and work with mechanisms that were very slow on previous GPUs. Compared to the Blackwell generation, it can perform attention calculations up to three times faster. At the same time, it has built-in dedicated units for video, making it suitable for multimodal applications where text, video and audio are combined.

Rubin GPU

They’ve got HBM memory, which has extremely wide throughput. This means that when generating results, the chip is not hampered by data movement and can take full advantage of the compute cores. NVIDIA has so far only officially unveiled versions designed for data centers and AI computing, but according to leaks and analysis, it is expected that the Rubin architecture may form the basis of future RTX gaming cards as well.

Vera Rubin CPU

Is a completely new processor architecture. Its role is not to be faster than the GPU, but to ensure seamless collaboration between all chips. It addresses coordination and logic in data centers, relieving the GPU and CPX of tasks that would hold them back.

The difference with Blackwell

Unlike Blackwell, which was more versatile and could be deployed from gaming PCs to servers, NVIDIA Rubin is like a specialist system. Each chip just does what it’s most efficient at. The result is higher performance per watt, faster processing of large inputs, and better scalability in data centers.

Reportedly, while Rubin has architectural roots in the GB202 chip (from the RTX 5090), its layout has been significantly redesigned – from different compute blocks, to more ROP units (ROP units = the parts of the GPU that write finished pixels to the image; important for gaming, not AI computing), to new memory solutions. These details are not official yet, they are information from leaks and analysis.

The biggest change, however, is that NVIDIA Rubin can process data that Blackwell would have to split into smaller chunks. This opens up new possibilities – from training multimodal models with extreme context, to generating consistent video with plot over long hours of footage.

When NVIDIA Rubin is coming

According to the information available, the NVIDIA Rubin architecture is expected to be released in late 2026. Along with the hardware, NVIDIA is also preparing a complete software package that includes:

- NVIDIA AI Enterprise Platform,

- CUDA-X libraries,

- and the new Dynamo orchestrator, which manages inferencing in data centers and provides collaboration between Rubin CPX, Rubin GPUs, and Vera processors.

Although this will primarily be a solution for large enterprises and data centers, the benefits of this architecture will also be felt by end users. Developers will get more powerful AI tools and everyday people will get applications capable of handling entire documents, long conversations or hours of video without losing context.

Vera Rubin NVL144 CPX – the AI data centre of the future

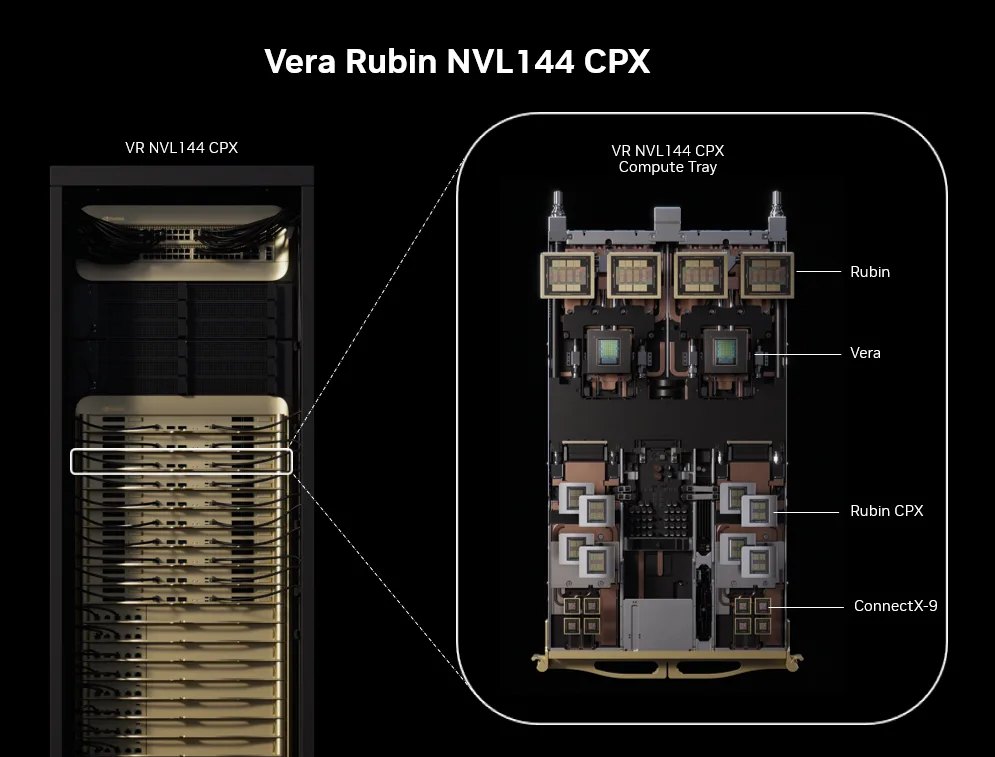

The NVIDIA Rubin architecture is not just about individual chips, but also about large server builds. The largest of these is the Vera Rubin NVL144 CPX – a rack-mount configuration that combines accelerators and processors into a single supercomputer.

Its performance reaches up to 8 exaFLOPS in NVFP4 precision. To give you an idea – 1 exaFLOPS means 10¹⁸ operations per second, or a billion billion billion calculations. One operation represents a basic mathematical operation, such as adding or multiplying decimal numbers. The entire rack has 100 TB of fast memory and a data throughput of 1.7 PB/s, which is approximately 7.5 times faster than the previous generation GB300 NVL72.

Such a system can analyze huge software codes at once, train models with extremely long context, or generate video that remains consistent over hours of recording.

What we can read from the figure

The illustration shows the entire Vera Rubin NVL144 CPX rack and its basic building block – the compute tray.

- On the left is the entire rack, with dozens of these modules nested on top of each other. The number “144” means that the assembly contains up to 144 Rubin CPX accelerators along with Vera GPUs and processors.

- On the right is a close-up of a single socket (compute tray). This module is actually a complete server where all the major parts of the Rubin architecture work together:

- Rubin GPUs with HBM memory – they generate the AI outputs,

- Vera CPU – coordinates and controls the data flow,

- Rubin CPX – handles the huge context,

- ConnectX-9 adapters – provide superfast interconnection between modules and racks.

Thus, each compute tray is a separate computing unit. When multiple of them are combined in a single rack, the result is a system that functions as one huge AI supercomputer with exaFLOPS-class performance.

Conclusion

The NVIDIA Ruby architecture is not just the next step in the evolution of graphics chips, but a new era in AI computing. By dividing tasks between dedicated chips – CPX, GPU, and CPU Vera – it delivers a solution that is more powerful, more economical, and can process data at a scale that was impossible not long ago.

For data centers, this means a huge shift in scalability and efficiency. For developers, new opportunities to build models with extremely long context or multimodal inputs. And for ordinary users, applications that can understand entire documents, long conversations or videos without losing context.

NVIDIA Rubin is thus not just a technical innovation, but also the foundation for future generations of AI – from industrial solutions to everyday tools that we will all use.